Comparing Plagiarism Checker Tools | Methods & Results

In search of the best plagiarism checker on the market in 2023, we conducted an experiment comparing the performance of 10 checkers. We also carried out a separate experiment comparing 10 free plagiarism checkers. Some tools were covered in both experiments.

For each tool, we analyzed how much plagiarism it detected, the quality of its matches, and its usability and trustworthiness.

This article describes our research process, explaining how we arrived at our findings.

We discuss:

- Which plagiarism checkers we selected

- How we prepared test documents

- How we analyzed quantitative results

- How we selected criteria for qualitative analysis

Plagiarism checkers analyzed

We kicked off our analysis by searching for the main plagiarism checkers on the market that can be purchased by individual users, excluding enterprise software. We decided to focus primarily on plagiarism checkers that mentioned students and/or academics as one of their target audiences.

For the free plagiarism checker comparison, we ran a similar search that specifically included the keyword “free.” All paid checkers were tested in April/May 2023, and all free checkers were tested in June 2023.

Some checkers were included in both experiments. All checkers included in either experiment are listed below:

- Scribbr (in partnership with Turnitin)

- Grammarly

- Plagiarism Detector

- PlagAware

- Pre Post SEO

- Quetext

- Smodin

- DupliChecker

- Viper

- Compilatio

- Search Engine Reports

- Writer

- Check Plagiarism

- Small SEO Tools

- Plagiarism Checker

- Copyleaks

- Editpad (failed to generate a report)

- Rewrite Guru (failed to generate a report)

- Pro Writing Aid (failed to generate a report)

Test documents

The initial unedited document consisted of 140 plagiarized sections from 140 different sources. These were distributed equally across 7 source types (20 per type) so that performance could be assessed for each source type individually.

The first document was compiled from:

- 20 Wikipedia articles

- 20 news articles

- 20 open-access journal articles

- 20 restricted-access journal articles

- 20 big website articles

- 20 theses and dissertations

- 20 other PDFs

Generally speaking, open- and restricted-access journal articles, theses and dissertations, large websites, and PDFs are more relevant to students and academics. Writers and marketers might be more interested in news articles and websites. Wikipedia articles can be useful for both.

Taking this into account, it is important to distinguish between source types: different users (e.g., students, academics, marketers, writers) rely on different sources and have different needs from a plagiarism checker.

You can request the test documents used in our analysis by emailing citing@scribbr.com.

Edited test documents

For the next step in our analysis, the unedited test document underwent three levels of editing: light, moderate, and heavy. We wanted to investigate whether each tool was able to find a source text when it had been edited to varying degrees.

- Light: The original copy-pasted source text was edited by replacing 1 word in every sentence.

- Moderate: The original copy-pasted source text was edited by replacing 2–3 words in every sentence, provided the sentence was long enough.

- Heavy: The original copy-pasted source text was edited by replacing 4–5 words in every sentence, provided the sentence was long enough.

We limited this part of the analysis to the source texts that all tools had detected during the first round. A checker that failed to find a source in its original, unedited form is unlikely to detect it once the text has been altered, so we excluded every source that at least one tool had missed.
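The selection rule above amounts to a set intersection. The sketch below is only an illustration of that rule, not a description of how the selection was carried out. It assumes the first-round scores are stored per tool as a mapping from a hypothetical source ID to the 0–2 score described under Procedure, and it assumes that any score above 0 counts as the source being "detected."

```python
def sources_found_by_all(scores: dict[str, dict[str, int]]) -> set[str]:
    """Return the IDs of sources that every checker detected in round one.

    scores maps each checker name to {source_id: score}, where score is 0, 1 or 2.
    ASSUMPTION: a source counts as "detected" if its score is above 0.
    """
    detected_per_tool = [
        {source for source, score in per_source.items() if score > 0}
        for per_source in scores.values()
    ]
    if not detected_per_tool:
        return set()
    # Keep only the sources present in every checker's detected set.
    return set.intersection(*detected_per_tool)


# Hypothetical first-round scores for two checkers and three sources.
first_round = {
    "Checker A": {"src-01": 2, "src-02": 0, "src-03": 1},
    "Checker B": {"src-01": 1, "src-02": 2, "src-03": 2},
}
print(sorted(sources_found_by_all(first_round)))  # ['src-01', 'src-03']
```

If only full matches should count as detected, the comparison `score > 0` would change to `score == 2`.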

The edited test documents consisted of 15 source texts from Wikipedia, 4 from big websites, 4 from news articles, 2 from open-access journals, and 6 from PDFs.

The example below shows one source text before and after each level of editing.

Original

Pre-industrial civilization dates back to centuries ago, but the main era known as the pre-industrial society occurred right before the industrial society. Pre-Industrial societies vary from region to region depending on the culture of a given area or history of social and political life. Europe was known for its feudal system and the Italian Renaissance.

Lightly edited

Pre-industrial civilization dates back to decades ago, but the main era known as the pre-industrial society occurred right before the industrial society. Pre-Industrial societies change from region to region depending on the culture of a given area or history of social and political life. France was known for its feudal system and the Italian Renaissance.

Moderately edited

Pre-industrial civilization dates back to decades ago, but the main period known as the pre-industrial society occurred just before the industrial society. Pre-Industrial societies change from region to region depending on the system of a given area or history of economic and political life. France was known for its mediaeval system and the Italian Renaissance.

Heavily edited

Pre-industrial city dates back to decades ago, but the main period known as the pre-industrial society occurred just before the industrial world. Pre-Industrial societies change from region to region affecting the system of a given timeframe or history of economic and political life. France was known for its mediaeval system or the Australian Renaissance.
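The replacement rule behind these examples can be sketched in a few lines of Python. This is a hypothetical illustration only: the replacement table is built from the substitutions in the lightly and moderately edited examples, and each level is capped at the upper end of its range (1, 3, and 5 replacements per sentence). A sentence with fewer replaceable words simply receives fewer edits, which loosely mirrors the "provided the sentence was long enough" condition.

```python
import re

# HYPOTHETICAL replacement table, built from the substitutions in the
# lightly and moderately edited examples. Not a general synonym list.
REPLACEMENTS = {
    "centuries": "decades",
    "era": "period",
    "right": "just",
    "vary": "change",
    "culture": "system",
    "social": "economic",
    "Europe": "France",
    "feudal": "mediaeval",
}

# Maximum replacements per sentence (upper end of each level's range).
MAX_REPLACEMENTS = {"light": 1, "moderate": 3, "heavy": 5}


def edit_sentence(sentence: str, max_replacements: int) -> str:
    """Replace up to max_replacements words, left to right, using REPLACEMENTS."""
    words = sentence.split(" ")
    replaced = 0
    for i, word in enumerate(words):
        if replaced >= max_replacements:
            break
        core = word.strip(".,;:")  # ignore surrounding punctuation when looking up
        if core in REPLACEMENTS:
            words[i] = word.replace(core, REPLACEMENTS[core])
            replaced += 1
    return " ".join(words)


def edit_text(text: str, level: str) -> str:
    """Apply the chosen editing level to every sentence of text."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return " ".join(edit_sentence(s, MAX_REPLACEMENTS[level]) for s in sentences)
```

With this table, `edit_text(original, "light")` and `edit_text(original, "moderate")` reproduce the lightly and moderately edited versions above word for word. The heavily edited version relies on substitutions that are not in the table (for example, "civilization" to "city"), so the `"heavy"` level in this sketch cannot reach 4–5 replacements per sentence.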

Procedure

We uploaded each version of the document to each plagiarism checker for testing. In some instances, we had to split a document into smaller ones, because some checkers have lower word limits per upload than others. We never split a single source text across two documents.
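Splitting a document without cutting through a source text is a simple packing problem. The sketch below is a hypothetical illustration: it assumes each source section is available as a separate string, counts words as whitespace-separated tokens, and fills uploads greedily in document order. The `word_limit` parameter is a placeholder; actual limits differ per checker.

```python
def split_for_upload(sections: list[str], word_limit: int) -> list[list[str]]:
    """Group consecutive source sections into uploads of at most word_limit words.

    A section is never split across two uploads; a section that is longer
    than the limit on its own raises ValueError.
    """
    uploads: list[list[str]] = []
    current: list[str] = []
    current_words = 0
    for section in sections:
        n = len(section.split())
        if n > word_limit:
            raise ValueError("a single source section exceeds the word limit")
        # Start a new upload if adding this section would exceed the limit.
        if current and current_words + n > word_limit:
            uploads.append(current)
            current, current_words = [], 0
        current.append(section)
        current_words += n
    if current:
        uploads.append(current)
    return uploads
```

For example, `split_for_upload(["a b c", "d e", "f g h i"], word_limit=5)` returns `[["a b c", "d e"], ["f g h i"]]`. Greedy packing keeps sections in their original order, which makes reports easy to map back to the full document, although it may use more uploads than an optimal packing.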

Subsequently, we manually evaluated each report, checking every plagiarized paragraph and scoring it 0, 1, or 2 depending on whether the checker:

(0) could not attribute any of its sentences to a source

(1) attributed one or more sentences to one or more sources

(2) attributed all sentences to one source

This way, we could test whether checkers reporting a high plagiarism percentage had actually found and fully matched the source, or had simply attributed a few sentences of the source text to multiple sources. This method also helped screen for false positives (e.g., non-plagiarized parts or common phrases highlighted as plagiarism).
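The three-point scale can be expressed as a small function. This is a sketch under simplifying assumptions: each sentence of a plagiarized section is matched to at most one source (or to none), the source IDs are hypothetical placeholders, and the function does not check whether a match points to the correct source or is a false positive, which in our evaluation required manual review.

```python
from typing import Optional


def score_section(attributions: list[Optional[str]]) -> int:
    """Score one plagiarized section on the 0-2 scale.

    attributions[i] is the ID of the source the checker matched sentence i to,
    or None if the checker did not flag that sentence.
    """
    matched = [source for source in attributions if source is not None]
    if not matched:
        return 0  # no sentence attributed to any source
    if len(matched) == len(attributions) and len(set(matched)) == 1:
        return 2  # every sentence attributed to the same single source
    return 1  # some sentences matched, or matches spread over several sources


print(score_section([None, None, None]))                # 0
print(score_section(["src-07", None, "src-12"]))        # 1
print(score_section(["src-07", "src-07", "src-07"]))    # 2
```

Note that a section whose sentences are all flagged, but against different sources, still scores 1: this is the "messy" partial match the scale is designed to separate from a full match.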

Data analysis

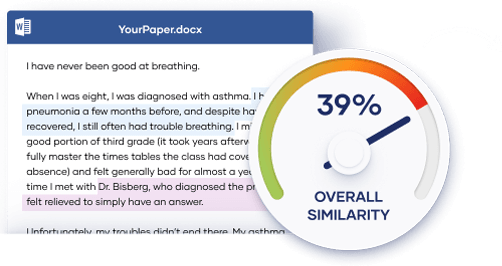

For the test document containing unedited text, we calculated each tool's total score and divided it by the total possible score of 280 (140 sections × 2 points). We then converted this number to a percentage.

We repeated this procedure for each edited document, but this time the total possible score was 62 (31 sections × 2 points), since we only included the 31 source texts that all plagiarism checkers had found.
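As a worked example of this calculation: with 2 points available per section, the maximum is 2 × 140 = 280 for the unedited document and 2 × 31 = 62 for each edited version. The sketch below assumes each tool's results are stored as a list of per-section scores (0, 1, or 2); rounding to the whole percentages shown in the table is left to the caller.

```python
def detection_rate(section_scores: list[int]) -> float:
    """Return a tool's total score as a percentage of the maximum possible score.

    Each section is scored 0, 1 or 2, so the maximum is 2 points per section
    (280 for 140 sections, 62 for 31 sections).
    """
    if not section_scores:
        raise ValueError("at least one scored section is required")
    if any(score not in (0, 1, 2) for score in section_scores):
        raise ValueError("scores must be 0, 1 or 2")
    max_score = 2 * len(section_scores)
    return 100 * sum(section_scores) / max_score


# Hypothetical scores: 70 full matches and 70 misses out of 140 sections.
print(detection_rate([2] * 70 + [0] * 70))  # 50.0
```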

Results

The following results indicate how much plagiarism each tool detected in the unedited, lightly edited, moderately edited, and heavily edited documents.

For the edited documents, we only used sources from the unedited document that all plagiarism checkers had found during the first round. Because this subset left out every source that at least one checker had missed, some checkers scored higher on the edited documents than on the unedited one.

This table is ranked based on the unedited column, but all the results were taken into account in our analysis.

| Plagiarism checker | Paid or free? | Unedited | Lightly edited | Moderately edited | Heavily edited |
|---|---|---|---|---|---|
| Scribbr (in partnership with Turnitin) | Paid (free version doesn’t have full report) | 84% | 98% | 87% | 63% |
| PlagAware | Paid | 71% | 87% | 48% | 23% |
| Viper | Paid | 51% | 61% | 39% | 23% |
| Small SEO Tools | Free (premium version available) | 49% | 50% | 47% | 31% |
| DupliChecker | Free (premium version available) | 48% | 50% | 47% | 32% |
| Quetext | Paid (free trial) | 48% | 63% | 63% | 23% |
| Plagiarism Detector | Free (premium version available) | 47% | 48% | 42% | 23% |
| Check Plagiarism | Paid (free trial) | 45% | 44% | 44% | 26% |
| Pre Post SEO | Paid (free trial) | 44% | 48% | 44% | 31% |
| Search Engine Reports | Free (premium version available) | 44% | 39% | 37% | 31% |
| Plagiarism Checker | Paid (free trial) | 43% | 32% | 31% | 15% |
| Grammarly | Paid | 37% | 68% | 36% | 19% |
| Writer | Paid | 34% | 42% | 24% | 8% |
| Smodin | Paid | 33% | 42% | 31% | 19% |
| Compilatio | Paid | 30% | 47% | 29% | 18% |
| Copyleaks | Paid | 20% | 45% | 42% | 27% |

Evaluating the plagiarism checker tools

Our next step was a qualitative analysis, in which we assessed each tool's quality of matches, usability, and trustworthiness against pre-set criteria. Applying the same criteria to every plagiarism checker made the evaluation more standardized and objective.

The selected criteria cover many of users’ key needs:

- Quality of matches: It’s crucial for plagiarism checkers to be able to match the entire plagiarized section to the right source. Partial matches, where the checker matches individual sentences to multiple sources, result in a messy, hard-to-interpret report. False positives, where common phrases are incorrectly marked as plagiarism, are also important to consider, because these skew the plagiarism percentages.

- Usability: It’s essential that plagiarism checkers show a clear overview of potential plagiarism issues. The report should be clear and cohesive, with a clean design. It is also important that users can instantly resolve the issues, for example by adding automatically generated citations.

- Trustworthiness: It’s important for students and academics that their documents are not stored by the checker or sold to third parties. This way, they can be sure that running a plagiarism check will not itself cause plagiarism issues when they later submit their text to their educational institution or for publication. It is also important that the tool offers customer support if problems occur.

Cite this Scribbr article


Driessen, K. (2023, June 12). Comparing Plagiarism Checker Tools | Methods & Results. Scribbr. Retrieved April 15, 2024, from https://www.scribbr.com/plagiarism/plagiarism-checker-comparison/