What is Effect Size and Why Does It Matter? (Examples)

Effect size tells you how meaningful the relationship between variables or the difference between groups is. It indicates the practical significance of a research outcome.

A large effect size means that a research finding has practical significance, while a small effect size indicates limited practical applications.

Why does effect size matter?

While statistical significance shows that an effect exists in a study, practical significance shows that the effect is large enough to be meaningful in the real world. Statistical significance is denoted by p values, whereas practical significance is represented by effect sizes.

Statistical significance alone can be misleading because it’s influenced by the sample size. As long as the true effect is not exactly zero, increasing the sample size makes it more likely that you’ll find a statistically significant effect, no matter how small that effect is in the real world.

In contrast, effect sizes don’t systematically grow or shrink with the sample size. They describe the size of the difference or relationship in the data, so a larger sample only makes the estimate more precise.
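To make the contrast concrete, here is a minimal sketch that holds a small group difference fixed and only changes the sample size. The means, standard deviation, and sample sizes are hypothetical values chosen for illustration, and the p value comes from a simple two-sample z test with equal group sizes, which is an assumption rather than a method taken from this article.

```python
from math import sqrt
from statistics import NormalDist


def cohens_d(mean_1, mean_2, sd):
    """Difference between two means, expressed in standard deviation units."""
    return (mean_1 - mean_2) / sd


def two_sided_p(mean_1, mean_2, sd, n_per_group):
    """Two-sided p value from a two-sample z test with equal group sizes."""
    standard_error = sd * sqrt(2 / n_per_group)
    z = (mean_1 - mean_2) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical summary statistics: the same small difference at every sample size.
mean_1, mean_2, sd = 50.5, 50.0, 10.0

results = []
for n in (100, 1_000, 10_000):
    d = cohens_d(mean_1, mean_2, sd)
    p = two_sided_p(mean_1, mean_2, sd, n)
    results.append((n, d, p))
    print(f"n per group = {n:>6}: d = {d:.2f}, p = {p:.4f}")
```

The effect size is the same in every row, while the p value shrinks as the groups get larger.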

That’s why it’s necessary to report effect sizes in research papers to indicate the practical significance of a finding. The APA guidelines require reporting of effect sizes and confidence intervals wherever possible.

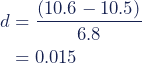

After six months, the mean weight loss (kg) for the experimental intervention group (M = 10.6, SD = 6.7) was marginally higher than the mean weight loss for the control intervention group (M = 10.5, SD = 6.8).

These results were statistically significant (p = .01). However, a difference of only 0.1 kg between the groups is negligible and gives no real reason to favor one method over the other.

Adding a measure of practical significance would show how promising this new intervention is relative to existing interventions.

How do you calculate effect size?

There are dozens of measures for effect sizes. The most common effect sizes are Cohen’s d and Pearson’s r. Cohen’s d measures the size of the difference between two groups while Pearson’s r measures the strength of the relationship between two variables.

Cohen’s d

Cohen’s d is designed for comparing two groups. It takes the difference between two means and expresses it in standard deviation units. It tells you how many standard deviations lie between the two means.

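| Cohen’s d formula | Explanation |
|---|---|
| $d = \dfrac{M_1 - M_2}{SD}$ | $d$ = Cohen’s d; $M_1$ = mean of group 1; $M_2$ = mean of group 2; $SD$ = standard deviation |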

The choice of standard deviation in the equation depends on your research design. You can use:

- a pooled standard deviation that is based on data from both groups,

- the standard deviation from a control group, if your design includes a control and an experimental group,

- the standard deviation from the pretest data, if your repeated measures design includes a pretest and posttest.

With a Cohen’s d of 0.015, there’s limited to no practical significance of the finding that the experimental intervention was more successful than the control intervention.
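The value of 0.015 can be reproduced from the weight loss example earlier in this article. This is a minimal sketch: the article doesn’t report the group sizes, so the pooled standard deviation below assumes two equally sized groups, and the function names are illustrative.

```python
from math import sqrt


def pooled_sd(sd_1, sd_2):
    """Pooled standard deviation, assuming both groups have the same size."""
    return sqrt((sd_1 ** 2 + sd_2 ** 2) / 2)


def cohens_d(mean_1, mean_2, sd):
    """Difference between two means, expressed in standard deviation units."""
    return (mean_1 - mean_2) / sd


# Summary statistics from the weight loss example (kg).
experimental_mean, experimental_sd = 10.6, 6.7
control_mean, control_sd = 10.5, 6.8

sd = pooled_sd(experimental_sd, control_sd)
d = cohens_d(experimental_mean, control_mean, sd)
print(round(d, 3))  # 0.015
```

Using the control group’s standard deviation (6.8) instead of the pooled value gives the same result after rounding.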

Pearson’s r

Pearson’s r, or the correlation coefficient, measures the extent of a linear relationship between two variables.

The formula is rather complex, so it’s best to use statistical software to calculate Pearson’s r accurately from the raw data.

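| Pearson’s r formula | Explanation |
|---|---|
| $r = \dfrac{n\sum xy - \sum x \sum y}{\sqrt{\left[n\sum x^2 - \left(\sum x\right)^2\right]\left[n\sum y^2 - \left(\sum y\right)^2\right]}}$ | $r$ = Pearson’s r; $n$ = number of paired observations; $x$, $y$ = values of the two variables; $\sum xy$ = sum of the products of paired values; $\sum x^2$, $\sum y^2$ = sums of the squared values |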

The main idea of the formula is to compare how much the two variables vary together with how much each variable varies on its own.

Pearson’s r is a standardized, unit-free measure of correlation, so you can directly compare the strengths of different correlations with each other.

One caveat is that Pearson’s r, like Cohen’s d, can only be used for interval or ratio variables. Other measures of effect size must be used for ordinal or nominal variables.
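For readers who want to see the calculation spelled out, the following is a minimal sketch of the common computational form of Pearson’s r, built from sums of products and squares. The function name and the small data set (hours studied and test scores) are hypothetical, and in practice statistical software remains the safer choice.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson's r for two equally long lists of interval or ratio values."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length with at least 2 pairs")
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    sum_y2 = sum(y * y for y in ys)
    numerator = n * sum_xy - sum_x * sum_y
    denominator = sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    if denominator == 0:
        raise ValueError("r is undefined when a variable has no variability")
    return numerator / denominator


# Hypothetical raw data: hours studied and test scores for five students.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 70]
r = pearson_r(hours, scores)
print(f"r = {r:.2f}")
```

Because the result is unit-free, the same function can be applied to any pair of interval or ratio variables and the resulting values compared directly.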

How do you know if an effect size is small or large?

Effect sizes can be categorized into small, medium, or large according to Cohen’s criteria.

Cohen’s criteria for small, medium, and large effects differ based on the effect size measurement used.

| Effect size | Cohen’s d | Pearson’s r |
|---|---|---|
| Small | 0.2 | .1 to .3 or -.1 to -.3 |
| Medium | 0.5 | .3 to .5 or -.3 to -.5 |
| Large | 0.8 or greater | .5 or greater or -.5 or less |

The magnitude of Cohen’s d can take on any value from 0 upward (its sign only reflects which group mean is subtracted from the other), while Pearson’s r ranges between -1 and 1.

In general, the greater the Cohen’s d, the larger the effect size. For Pearson’s r, the closer the value is to 0, the smaller the effect size. A value closer to -1 or 1 indicates a larger effect size.

Pearson’s r also tells you something about the direction of the relationship:

- A positive value (e.g., 0.7) means both variables either increase or decrease together.

- A negative value (e.g., -0.7) means one variable increases as the other one decreases (or vice versa).
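Cohen’s criteria and the direction rule can be sketched as a pair of small helper functions. The function names and the "below small" label for values under the smallest benchmark are assumptions made for this illustration. Since the ranges in the table share their boundary values, the sketch assigns a boundary value such as .3 to the higher category.

```python
def label_cohens_d(d):
    """Label the magnitude of Cohen's d using Cohen's benchmarks."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"  # hypothetical label; the table has no category here


def label_pearsons_r(r):
    """Return (magnitude, direction) for Pearson's r using Cohen's benchmarks."""
    if not -1 <= r <= 1:
        raise ValueError("Pearson's r must lie between -1 and 1")
    size = abs(r)
    if size >= 0.5:
        magnitude = "large"
    elif size >= 0.3:
        magnitude = "medium"
    elif size >= 0.1:
        magnitude = "small"
    else:
        magnitude = "below small"  # hypothetical label
    if r > 0:
        direction = "positive"
    elif r < 0:
        direction = "negative"
    else:
        direction = "none"
    return magnitude, direction


print(label_cohens_d(0.015))    # below small
print(label_pearsons_r(-0.7))   # ('large', 'negative')
```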

The criteria for a small or large effect size may also depend on what’s commonly found in research in your particular field, so be sure to check other papers when interpreting effect size.

When should you calculate effect size?

It’s helpful to calculate effect sizes even before you begin your study as well as after you complete data collection.

Before starting your study

Knowing the expected effect size means you can figure out the minimum sample size you need for enough statistical power to detect an effect of that size.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is more likely to reject a false null hypothesis, so it is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. In that case you don’t reject the null hypothesis, even though there is an actual effect.

By performing a power analysis, you can use a set effect size and significance level to determine the sample size needed for a certain power level.
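As a rough illustration of a power analysis, the sketch below estimates the sample size per group needed to compare two group means. It relies on a normal approximation for a two-sided test with equal group sizes, which is an assumption of this example. Dedicated power analysis software based on the t distribution typically gives slightly larger numbers. The function name and default values are illustrative.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group needed to detect a given Cohen's d.

    Normal approximation for a two-sided, two-group comparison
    with equally sized groups.
    """
    if effect_size == 0:
        raise ValueError("effect size must be nonzero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {sample_size_per_group(d)} participants per group")
```

Smaller expected effects require much larger samples: halving the effect size roughly quadruples the sample size needed for the same power.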

After completing your study

Once you’ve collected your data, you can calculate and report actual effect sizes in the abstract and the results sections of your paper.

Effect sizes are the raw data in meta-analysis studies because they are standardized and easy to compare. A meta-analysis can combine the effect sizes of many related studies to get an idea of the average effect size of a specific finding.

Meta-analyses can also go one step further and suggest why effect sizes may vary across studies on a single topic, which can generate new lines of research.
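One common way to combine effect sizes is a weighted average in which more precise studies count for more. The sketch below uses inverse-variance weights with a standard large-sample approximation for the variance of Cohen’s d. The three studies are hypothetical, and this fixed-effect approach is only one of several models a real meta-analysis might use.

```python
def d_variance(d, n_1, n_2):
    """Approximate sampling variance of Cohen's d for two independent groups."""
    return (n_1 + n_2) / (n_1 * n_2) + d ** 2 / (2 * (n_1 + n_2))


def pooled_effect(studies):
    """Inverse-variance weighted average of Cohen's d across studies.

    Each study is a tuple: (d, size of group 1, size of group 2).
    """
    weights = [1 / d_variance(d, n_1, n_2) for d, n_1, n_2 in studies]
    weighted_sum = sum(w * d for w, (d, _, _) in zip(weights, studies))
    return weighted_sum / sum(weights)


# Hypothetical studies on the same topic: (Cohen's d, n in group 1, n in group 2).
studies = [(0.30, 40, 40), (0.55, 25, 25), (0.10, 120, 120)]
average_d = pooled_effect(studies)
print(f"average effect size: d = {average_d:.2f}")
```

The largest study has the smallest variance, so it pulls the average toward its own effect size more than a simple mean would.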


Frequently asked questions about effect size

- What is effect size?

  Effect size tells you how meaningful the relationship between variables or the difference between groups is.

  A large effect size means that a research finding has practical significance, while a small effect size indicates limited practical applications.

- How do I calculate effect size?

  There are dozens of measures of effect sizes. The most common effect sizes are Cohen’s d and Pearson’s r. Cohen’s d measures the size of the difference between two groups while Pearson’s r measures the strength of the relationship between two variables.

- What’s the difference between statistical and practical significance?

  While statistical significance shows that an effect exists in a study, practical significance shows that the effect is large enough to be meaningful in the real world.

  Statistical significance is denoted by p values, whereas practical significance is represented by effect sizes.

- What is statistical power?

  In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is more likely to reject a false null hypothesis, so it is less likely to produce a false negative (a Type II error).

  If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

Cite this Scribbr article

Bhandari, P. (2023, June 22). What is Effect Size and Why Does It Matter? (Examples). Scribbr. Retrieved April 25, 2024, from https://www.scribbr.com/statistics/effect-size/